I used to write quite a few articles about the giants and the extreme bodies that have existed throughout human history. This is a continuation of that series. So who is the tallest woman in history?

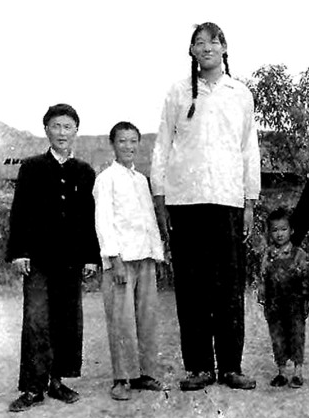

Barring any mythical legends of lost tribes and civilizations, the tallest woman in recorded history is Zeng Jinlian, who doctors calculated would have been 8 feet 1.75 inches (248.3 cm) tall if her severely curved spine had been fully straight. That would make her the only woman to have passed the 8-foot mark.

From the Tallest Man website HERE, the story of her life.

Zeng Jinlian (June 26, 1964 – February 13, 1982) was the tallest female ever recorded in medical history, taking Jane Bunford’s record. She is also the only female counted among the 16 individuals in medical history who reached a verified eight feet or more. At the time of her death at the age of 17, in Hunan, China, she was 8 ft 1.75 in (248.3 cm) tall. However, she could not stand up straight due to a severely deformed spine.

Nevertheless, she was the tallest person in the world at the time. In the year between the death of 8 feet 2 inch Don Koehler and her own, she surpassed fellow ‘eight-footers’ Gabriel Estavao Monjane and Suleiman Ali Nashnush. She was the second woman in recorded history to become the tallest person in the world. Jane Bunford was the first.

From Wikipedia HERE…

Zeng Jinlian (simplified Chinese: 曾金莲; traditional Chinese: 曾金蓮; pinyin: Zēng Jīnlián; June 25, 1965 – February 13, 1982) was the tallest woman ever verified in medical history, taking over Jane Bunford’s record. Zeng is also the only woman counted among the fourteen people who have reached verified heights of eight feet or more.

At the time of her death at the age of 17 in Hunan, China, Zeng, who would have been 8 feet, 1.75 inches (249 cm.) tall (she could not stand straight because of a severely deformed spine), was the tallest person in the world. In the year between Don Koehler’s death and her own, she surpassed fellow “eight-footers” Gabriel Estêvão Monjane and Suleiman Ali Nashnush. That Zeng’s growth patterns mirrored those of Robert Wadlow is shown in the table below.

| Age | Height of Zeng Jinlian | Height of Robert Wadlow |
|---|---|---|
| 4 | 5 feet 1.5 inches | 5 feet 4 inches |
| 13 | 7 feet 1.5 inches | 7 feet 4 inches |
| 16 | 7 feet 10.5 inches | 7 feet 10.5 inches |
| 17 | 8 feet 1.75 inches | 8 feet 1.5 inches |

As for the tallest woman alive today, that title goes to Yao Defen, who stands somewhere between 7′ 8″ and 7′ 9″, depending on which source you use.

From the Wikipedia article HERE….

Yao Defen (Chinese: 姚德芬; pinyin: Yáo Défēn) of China (born July 15, 1972) is the tallest living woman, as recognized by Guinness World Records.[2] She stands 7 ft 8 in tall (2.33 m), weighs 200 kilograms (440 lb), and has size 26 (UK) / 78 (EU) feet.[3][4][5] Her gigantism is due to a tumor in her pituitary gland.

Early life

Yao Defen was born to poor farmers in the town of Liuan in the Anhui province of Shucheng County. At birth she weighed 6.16 pounds. At the age of three years she was eating more than three times the amount of food that other three-year-old children were eating. When she was eleven years old she was about six feet, two inches tall. She was six feet nine inches tall by the age of fifteen years.

The story of this “woman giant” began to spread rapidly after she went to see a doctor at the age of fifteen years for an illness. The doctor (who also saw her again years later) correctly diagnosed the illness but decided not to treat her, because she and her family did not have the 4000 yuan for the surgery (despite its official communist and socialist claims, the People’s Republic of China does not have, and did not have, a “free” or “social” public health service, except for high government officials).[6] After that, many companies attempted to train her to be a sports star. The plans were abandoned, however, because Defen was too weak. Because she is illiterate, since 1992 Yao Defen has been forced to earn a living by traveling with her father and performing.

Yao Defen’s giant stature was caused by a large tumor in the pituitary gland of her brain, which was releasing too much growth hormone and caused excessive growth in her bones. Six years ago, a hospital in Guangzhou Province removed the tumor, and she stopped growing.

The tumor returned and she was treated in Shanghai in 2007, but was sent home for six months with the hope that medication would reduce her tumor enough to allow surgery. The second surgery was never performed due to lack of funds.

In 2009, the TLC cable TV network devoted a whole night’s show to her. She suffered from a fall in her home and had internal bleeding of the brain. She recovered and felt some happiness after a visit from China’s tallest man, Zhang Juncai.

Medical help

A British television program filmed a documentary on her and helped raise money so she could get proper medical care. They measured her and according to the documentary she is seven feet, eight inches tall. Two leading doctors in acromegaly agreed to help Yao. She was taken to a nearby city hospital, where imaging procedures revealed that a small portion of her tumor, thought to have been removed many years before, still remained, causing continuing problems including weakening vision as it pressed against her optic nerve. She returned home, then was admitted for a month under observation in the larger Shanghai Ruijin Hospital, and given dietary supplements. In that hospital, her growth hormone was greatly slowed down, although it is still a problem. Upon her return home to her mother and brother, she was able to walk with crutches, unassisted by others, and was given a six-month supply of medicines and supplements in hopes of improving her condition enough to undergo surgery.

Acromegaly

Yao currently suffers from hypertension, heart disease, poor nutrition, and osteoporosis. Acromegaly often results from a tumor within the pituitary gland that causes excess growth hormone secretion. As a result, the body’s features become enlarged. It can also delay the onset of puberty, as is the case with Yao. She has no secondary sexual characteristics. Potential complications without surgery include blindness and eventually premature death.

She lives near her mother (who is only four feet, eight inches tall) in a small village in rural China.

From the Tallest Man website HERE…

Yao Defen – 7 feet 8 inches (233.7 cm)

Yao Defen of China (born 15 July 1972) claims to be the tallest woman in the world. The Guinness Book of World Records listed the American giantess Sandy Allen as the world’s tallest woman until her death on 13 August 2008, and disputed Yao Defen’s claim until the 2011 edition of the Guinness World Records. She weighs 200 kg (440 lbs) and has size 57 (EU) (around 20 US) feet. Her gigantism is due to a tumor in her pituitary gland.

Yao Defen – Early life

Yao Defen was born to poor farmers in the town of Liuan in the Anhui province of Shucheng County. At birth she weighed 6.16 pounds. At age 3 she was eating more than three times the amount of food that other three-year-olds were eating. When she was 11 years old she was about 6 foot 2 inches tall. She was 6 foot 8 inches tall by the age of 15. The story of this “woman giant” began to spread rapidly after she went to see a doctor at age 15 for an illness. After that, many companies attempted to train her to be a sports star. The plans were abandoned, however, because Yao Defen was too weak. Because she is illiterate, since 1992 Yao Defen has been forced to earn a living by traveling with her father and performing.

Yao Defen – Pituitary Gland

Yao Defen’s giant stature was caused by a large tumor in the pituitary gland of her brain, which was releasing too much growth hormone and caused excessive growth in her bones. Six years ago, a hospital in Guangzhou Province removed the tumor, and she stopped growing. The tumor returned and she was treated in Shanghai in 2007, but was sent home for 6 months with the hope that medication would reduce her tumor enough to allow surgery. It remains unknown if the second surgery was ever performed.

Yao Defen – measured at 7 feet 9 inches tall?

A British television programme filmed a documentary on her and helped raise money so she could get proper medical care. They did measure her and according to the documentary she even was 7 feet 9 inches tall. Two leading doctors in acromegaly agreed to help Yao. She was taken to a nearby city hospital, where imaging procedures revealed that a small portion of her tumor, removed many years before, still remained, causing continuing problems, including weakening vision as it pressed against her optic nerve. She returned home, then was admitted for a month under observation in the larger Shanghai Ruijin Hospital, and given dietary supplements. In that hospital, her growth hormone was greatly slowed down, although it is still a problem. Upon her return home to her mother and brother, she was able to walk with crutches, unassisted by others, and was given a six-month supply of medicines and supplements in hopes of improving her condition enough to undergo surgery.

Yao Defen – Ill Health

Yao Defen currently suffers from hypertension, heart disease, poor nutrition, and osteoporosis. Acromegaly often results from a tumor within the pituitary gland that causes excess growth hormone secretion. As a result, the body’s features become enlarged. It can also delay the onset of puberty as is the case with Yao Defen. She has no secondary sex characteristics. Potential complications without necessary surgery include blindness and eventually premature death.

Yao Defen – Dead or Alive?

She lives with her mother (who is only 4 ft 8 inches tall) in a small village in rural China. There are many rumours on the internet saying that Yao Defen has died. It is indeed next to impossible to find any information about Yao Defen after 2009, but I think it would have been all over the internet if she is no longer alive. So for the time being, until more information comes available, I assume she is still alive. I hope so, anyway.